This article is republished from InfoQ, by Wang Yipeng

Data is the carrier of information, the foundation of informatization, digitalization, and digital intelligence, and the raw material on which AI models learn and train. The core goal of AI is to enable machines to think, learn, make decisions, and solve problems as humans do, and its "underlying nourishment" is still data. When data is organized into parameters and fed to AI models, and the parameter scale exceeds 60 billion, "intelligent emergence" occurs, which is the generative AI that everyone is paying attention to today.

Technical implementation alone, however, is far from sufficient. Data & AI infrastructure has therefore emerged as an integrated foundational software platform built to support the large-scale deployment of AI. Its core goal is to connect the entire chain of data storage, governance, computation, and AI model development, achieving the two-way empowerment of "Data for AI" and "AI for Data."

From this perspective, many enterprises today believe that AI empowering business simply means deploying Dify privately and purchasing all-in-one machines, which is clearly problematic.

Model vendors and AI open-source communities have solved the algorithm problem, and mainstream chip, computing cloud, and public cloud vendors have jointly solved the computing power problem. Enterprises still need to focus on what remains: how to collect, govern, and apply their private-domain data well, building capabilities specific to their business on top of GenAI's general capabilities.

Jiang Linquan, CIO of Alibaba Cloud Intelligence Group & Head of aliyun.com, made a similar point in his talk at AICon 2025 Shenzhen: deploying large models in enterprises involves four key steps, one of which is Execute (promoting data construction and engineering implementation).

Yu Yang, Founder & General Manager of KeenData, stated: "For AI to be deployed in enterprises to serve business analysis and assist decision-making, what matters most is that it understands the enterprise's own data. That depends on how deeply the enterprise's technology and business are integrated, and it relies on Data Ready and the continuous construction of a governance system."

Jensen Huang, Founder and CEO of NVIDIA, stated that every company owns a "data" goldmine, and enterprises will move from simple data accumulation and usage to higher-level intelligent production. Enterprises will build industry-specific intelligence by training customized models based on their own data, and every enterprise will establish its own AI factory.

This also explains why, in 2025, the Data & AI track is no less popular than AI Agent development platforms, which have already become the focus of global capital.

Domestically, the State Council's "Opinions on Deeply Implementing the 'AI+' Action" points out that AI infrastructure needs to be supported by the integration of "data-computing power-algorithm"; meanwhile, departments such as the National Development and Reform Commission, National Data Administration, and Ministry of Industry and Information Technology have intensively issued policies such as "National Data Infrastructure Construction Guidelines" and "Trusted Data Space Development Action Plan," clearly formulating data infrastructure reference architectures aimed at building a data-centric digital economy system.

In July this year, the White House officially released "America's AI Action Plan," which focuses on innovation and infrastructure. The plan aims to use AI technology to drive three major transformations (an industrial revolution, an information revolution, and a renaissance), build America's AI infrastructure, and comprehensively enhance national economic competitiveness and people's living standards.

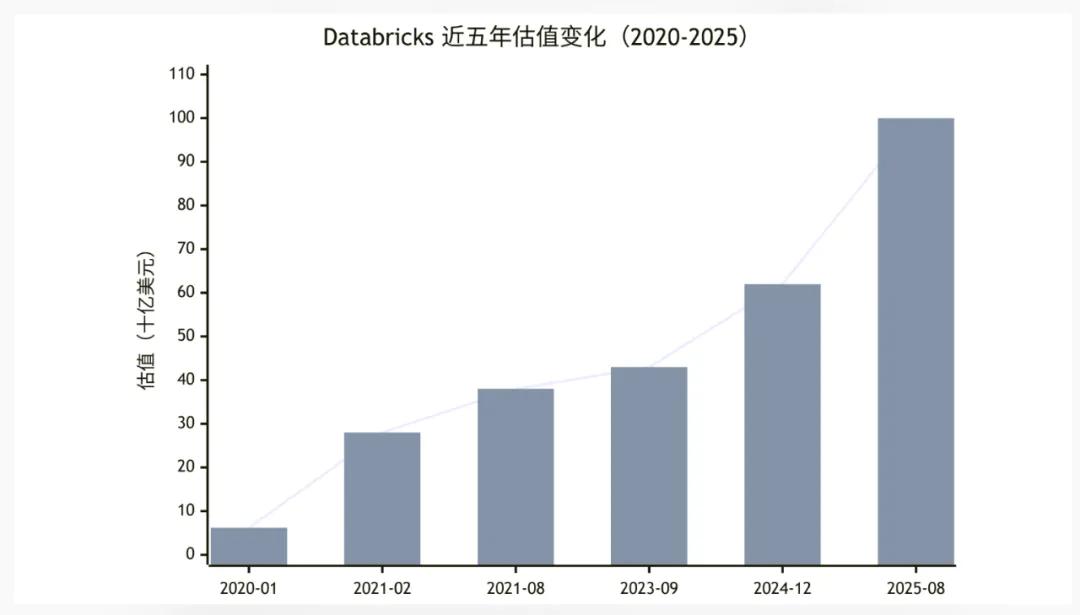

According to reports from Touzhong.com, Databricks announced that it has signed the term sheet for its Series K funding round. The round is expected to close soon with support from existing investors and values the company at more than $100 billion.

Databricks is not short of money: at the end of 2024 it raised a total of $10 billion in what was called "the largest venture investment in history." As of mid-2025, Databricks' annualized revenue (ARR) is approximately $3.7 billion, growing 50% year over year. With finances this healthy, raising a Series K just six months later is, as Databricks' CEO himself said, entirely the result of overly enthusiastic capital.

This is a true portrait of the current global Data & AI market. A Deloitte survey shows that 28% of AI-leading enterprises are using Data & AI solutions to integrate data and AI and deliver efficient, high-value AI applications.

However, beneath the excitement, a bubble is also forming in the track. Simply bolting open-source large model APIs and vector storage onto a traditional big data platform, and then claiming to have completed a "Data & AI upgrade," easily distorts market perception. It can also leave pragmatic enterprises that focus on technological innovation with less attention than they deserve.

1 The Real Problem Beneath the Bubble: The Core Contradiction of Data & AI is "Integration"

Data is a key factor of production, and AI is a new production tool that, to some extent, is also becoming a "worker." Together, the two form part of the new quality productive forces and are the most important growth engine of today's digital economy.

Therefore, Data & AI has been a systematic engineering project from its inception.

The story starts with the birth of Apache Hadoop in 2006, which solved the core challenges of massive data storage and batch processing. In 2014, the emergence of Apache Spark brought stream-batch integrated computing and reduced data processing latency from hours to minutes. After 2020, the popularization of the Lakehouse architecture overcame both the disorder of data lakes and the closed nature of data warehouses, balancing real-time reads and writes with structured analysis. During this period, the big data market grew explosively, giving rise to specialist vendors such as Cloudera and Hortonworks and nurturing phenomenal open-source projects such as Apache Flink and Iceberg.

At each stage, big data infrastructure and its open-source technology ecosystem have been able to meet the development needs of the digital economy.

Development in the AI field, by contrast, has been explosive. For the preceding fifty years, AI research progressed gradually. Since the release of TensorFlow in 2015, AI technology has developed rapidly, and within ten years it has created the industry's largest technological dividend.

However, enterprises quickly discovered that the "deployment bottleneck" of AI models never lies in the algorithms themselves. The "high-quality, high-availability, low-latency" data supply that AI requires cannot be fully satisfied by traditional big data architectures; while the massive data accumulated by big data platforms also needs AI tools to release its value.

Specialist vendors that have long invested in the Lakehouse technology direction have a clear technical advantage here.

The comprehensive integration of Data & AI has become inevitable, reconstructing data infrastructure from architecture, process, and scenario levels, enabling data to flow seamlessly to AI models and AI development to be embedded in the entire data lifecycle.

Similar concepts go by various names domestically. Yonyou calls it "'AI×Data×Process' native integration," Tencent Cloud calls it "Data+AI dual-wheel driven integration," and KeenData calls its product AI-Native Data&AI integrated infrastructure. All of these concepts come back to one word, "integration," which can be understood along three specific dimensions.

First is architectural integration.

Traditional big data architectures are built around "data storage and computation" and pursue high throughput and high fault tolerance, but they fall clearly short on what AI requires: low-latency data access, multimodal data processing, and resource scheduling for model training. For example, AI model training often reads the same sample data repeatedly, which turns a traditional HDFS storage architecture into an I/O bottleneck, while the resource scheduling system of big data platforms (YARN) cannot adapt to the dynamic allocation of GPU/TPU resources that AI training requires.

The core of architectural integration is building "AI-Native" data infrastructure. Taking Lakehouse architecture as an example, it needs to integrate vector databases (supporting multimodal data retrieval), model serving engines (Model Serving), and dynamic resource scheduling modules on the basis of the original "data lake + data warehouse." It must ensure efficient storage of PB-level data while meeting the requirements of AI models for millisecond-level data reading and elastic computing power scheduling.

Second is process integration.

A common dilemma for enterprises runs as follows. After the data team completes collection, cleaning, and governance on the data foundation, it has to export the data in CSV or Parquet format, which the AI team then imports into a model training platform. Once training is complete, deploying the model to production means connecting to the business systems' data interfaces all over again. The whole process involves many manual hand-offs, which is not only inefficient but also prone to data inconsistency.

The essence of process integration is achieving tool-based unification of data engineering and AI engineering. Specifically, it needs to cover three stages:

- Data preparation stage: Data governance tools need built-in feature engineering capabilities, supporting automatic extraction of model-required features from raw data without AI teams needing to reprocess;

- Model development stage: AI development platforms need to directly access the asset catalog of the data foundation, supporting real-time invocation of streaming data from data lakes for model iteration;

- Model deployment stage: Platforms need to provide MLOps capabilities, achieving linkage between model deployment, monitoring, rollback, and data quality monitoring—when data quality declines, it can automatically trigger model retraining.
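The linkage described in the deployment stage, where a drop in data quality triggers retraining, can be sketched in a few lines. This is a minimal illustration only: the `profile` and `monitor` functions, the null-rate metric, and the thresholds are all hypothetical choices, not part of any vendor's MLOps product.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class QualityReport:
    null_rate: float  # fraction of rows where the feature is missing
    row_count: int    # number of rows in the inspected batch


def profile(rows: Sequence[dict], feature: str) -> QualityReport:
    """Compute a tiny data-quality profile for one feature column."""
    missing = sum(1 for row in rows if row.get(feature) is None)
    rate = missing / len(rows) if rows else 1.0
    return QualityReport(null_rate=rate, row_count=len(rows))


def quality_declined(report: QualityReport,
                     max_null_rate: float = 0.05,
                     min_rows: int = 3) -> bool:
    """Hypothetical rule: too many missing values or too few rows."""
    return report.null_rate > max_null_rate or report.row_count < min_rows


def monitor(rows: Sequence[dict], feature: str,
            retrain: Callable[[], None]) -> bool:
    """Check one batch and call the retraining hook if quality declined."""
    if quality_declined(profile(rows, feature)):
        retrain()
        return True
    return False


# Usage: the lambda stands in for a real retraining job submission.
triggered = []
healthy = [{"amount": 10.0}, {"amount": 12.5}, {"amount": 9.9}]
degraded = [{"amount": None}, {"amount": 12.5}, {"amount": None}]
monitor(healthy, "amount", lambda: triggered.append("retrain"))
monitor(degraded, "amount", lambda: triggered.append("retrain"))
print(triggered)  # only the degraded batch triggers the hook
```

In a real platform the quality rule would come from the governance tooling and the hook would submit a pipeline run; the point of the sketch is only that monitoring and retraining share one data path instead of being handed off between teams.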

It can be said that achieving architectural integration and process integration is a necessary and sufficient condition for achieving Data & AI platform-level capabilities.

Third is scenario integration.

With the evolution of AI technology, Data & AI application scenarios have moved from single structured data analysis to composite scenarios of "multimodal data + intelligent Agent." For example, automotive companies' intelligent cockpits need to process multimodal data such as voice, images, and sensors, while calling assets like user behavior tags and vehicle fault data; financial institutions' intelligent investment advisory Agents need to connect in real-time with market data, customer position data, and call risk assessment models to generate recommendations. These scenarios require Data & AI platforms to have integrated capabilities of "multimodal data processing + data assetization + Agent development."

However, the difficulty of scenario integration lies in compatibility and scalability. On one hand, platforms need to support unified storage and retrieval of multiple types of data such as text, images, audio, and IoT time-series data, for example, achieving semantic retrieval of unstructured data through vector databases; on the other hand, they need to provide low-code Agent development tools, allowing business personnel to quickly build intelligent applications based on existing data assets, rather than relying on algorithm teams to develop from scratch.
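To make "semantic retrieval through vector databases" concrete, here is a minimal sketch of the underlying idea: assets of different modalities are stored as embedding vectors, and a query returns the nearest ones by cosine similarity. The `ToyVectorIndex` class, the asset names, and the three-dimensional vectors are invented for illustration; a production vector database would use learned embeddings and approximate nearest-neighbour indexes rather than a linear scan.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorIndex:
    """Linear-scan index holding (asset_id, modality, embedding) entries."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, str, List[float]]] = []

    def add(self, asset_id: str, modality: str, vector: Sequence[float]) -> None:
        self._items.append((asset_id, modality, list(vector)))

    def search(self, query: Sequence[float], k: int = 2) -> List[Tuple[str, str]]:
        """Return the k most similar assets, regardless of modality."""
        scored = [(cosine(query, vec), asset_id, modality)
                  for asset_id, modality, vec in self._items]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(asset_id, modality) for _, asset_id, modality in scored[:k]]


# Hypothetical assets with hand-written embeddings.
index = ToyVectorIndex()
index.add("manual_brake.pdf", "text", [0.9, 0.1, 0.0])
index.add("dashcam_0412.jpg", "image", [0.8, 0.2, 0.1])
index.add("cabin_voice_17.wav", "audio", [0.0, 0.1, 0.9])

print(index.search([1.0, 0.0, 0.0], k=2))
# [('manual_brake.pdf', 'text'), ('dashcam_0412.jpg', 'image')]
```

Because text, image, and audio assets live in the same vector space, one query can retrieve across modalities, which is the property the unified storage and retrieval requirement depends on.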

The above triple integration is the foundation for enterprises to establish AI factories, which has been fully validated in actual industry implementation cases. Japan's AEON Group began building its group-wide integrated data infrastructure in 2020.

AEON Group, a Japan-based multinational retail chain, has very complex business formats and models. It spans the retail, health, and finance sectors, has over 500 member companies, and runs applications across AWS and Azure cloud environments. Achieving triple integration is therefore extremely difficult: the platform needs cross-cloud hybrid deployment capabilities, its provider needs to know how to build a sustainable operational data capability system for a large group enterprise, and the data foundation platform tools have to be integrated with AEON Group's business and organizational systems.

The project was needed because the data warehouse product AEON was using (Azure Synapse) could not meet operational requirements, either in computing power or in support for intelligent business scenarios. Specifically, it could not uniformly aggregate data from the various business sectors. Business indicators were inconsistent, and without the ability to connect and standardize data, AEON could not form comprehensive data assets, high-quality datasets, or the management capabilities that AI applications require. Business changes led to lengthy response processes for data tasks, and data was scattered, of varying quality, and unsupported by AI tools, which created bottlenecks in supporting intelligent warehouse and logistics allocation and intelligent decision-making.

After evaluating domestic and international Data&AI integrated platforms, AEON Group ultimately chose KeenData's platform as its intelligent infrastructure foundation, together with the design of supporting data standard and data indicator systems. The project promoted the construction of data asset systems for multiple business domains, including Jasmine Fantasy, overseas shopping, supermarkets, and GMS, and formed a group-level Data&AI integrated platform. That platform met internal leadership's basic need to view reports while laying a solid data foundation for group-level data intelligence scenarios such as store location selection, customer profiling, precision marketing, and intelligent supply chain, ultimately meeting AEON Group's platform construction requirements.

Yu Yang, Founder & General Manager of KeenData, believes: "Deploying such a foundation platform in large organizations is not just a matter of product capabilities and technical advancement. More important is a set of work methodologies that ensure continuous data integration and governance, and complete value innovation in combination with business scenarios. Work methodologies give the data foundation platform a soul, make the foundation platform deployable, make the foundation platform a lever for comprehensive enterprise data intelligence deployment, and enable enterprises to systematically deploy AI capabilities and begin to possess native AI capabilities."

Of course, the enterprises participating in AEON Group's project bidding included not only KeenData but also Databricks, Snowflake...

This involves an unavoidable "soul-searching question" in the Data & AI industry: globally, for super-large enterprises with very complex business formats and models, who are the "leading players," and how should we view technical selection, enterprise cooperation, and potential cooperation costs among them?

2 The Dilemma of Selection and Cost: How to Find Reliable Partners in the Data & AI Field?

With that question in mind, we can roughly divide potential partners in the Data & AI field into three types:

- Traditional big data companies, such as Transwarp Technology, Minglue Technology, Oriental Jinsin, etc.;

- Cloud computing companies, such as AWS, Alibaba Cloud, etc.;

- Data & AI infrastructure platform providers, such as KeenData, Databricks, Snowflake, etc.

Traditional big data companies have been deeply involved in the industry for many years, with rich experience and certain big data platform projects and customer accumulation. Especially during the pandemic's "health code" project, traditional big data companies accumulated a large amount of project implementation experience. It can be said that traditional big data companies are both core contributors to big data open-source projects and core promoters and beneficiaries of the previous generation of digital transformation waves.

It should be noted, however, that since the rise of GenAI these enterprises have themselves been in a transition period. Their AI capabilities are mostly integrated from outside, that is, they achieve intelligent data querying by calling third-party large model APIs. They currently have relatively little implementation experience and few cases in building integrated Data & AI infrastructure.

Relatively speaking, these enterprises focus more on big data capabilities and are suitable for delivering requirements centered on "data analysis" in the industrial ecosystem, such as sales data analysis for traditional retail enterprises and statistical report generation for government departments.

Cloud computing companies have more complete ecosystems, huge product matrices, rich project experience, wide resource connections, and strong technical capabilities, but weak customization and cost controllability. The advantages of cloud vendors like Alibaba Cloud and AWS in Data & AI solutions lie in the integration of computing power and ecosystems. These vendors can provide full-stack services from IaaS (computing power, storage) to PaaS (data foundation, AI development platforms). Enterprises can directly build systems based on cloud-native architectures without worrying about underlying hardware deployment, and because they can achieve software-hardware collaborative optimization, they have better performance guarantees.

The problem, however, is that cloud computing enterprises' attitude toward customization and private deployment requirements is almost always "ambiguous." On one hand, cloud is a compound-interest business built on standardized products and cannot lean fully toward custom development; on the other hand, whether at home or abroad, large enterprises' requirements are almost always "customized." Cloud vendors' products are therefore mostly standardized modules that are difficult to adapt to the complex business processes and data security requirements of large enterprises such as state-owned enterprises and manufacturers.

Additionally, long-term operating costs under the cloud model can be very high. Cloud vendors charge by computing power and storage volume, and the more economical pay-as-you-go billing models do not cover every service. When enterprise data reaches PB scale, annual expenditures may exceed tens of millions, and there is a risk of "vendor lock-in," because the cost of migrating to another platform is extremely high. This is also why emerging computing cloud enterprises found significant room to survive after the rise of GenAI: they are cheaper and more flexible.

Therefore, cloud computing enterprises are more compatible with small and medium-sized enterprises or internet companies. These enterprises have relatively standardized business processes, high elastic demand for computing power, and can accept long-term dependence on cloud vendors' ecosystems, or have deep cooperation demands with large cloud vendors in channels, overseas expansion, etc.

Data & AI infrastructure platform providers have strong integration capabilities, balancing customization and compliance. Professional vendors represented by Databricks, Snowflake, and KeenData have their core competitiveness in the native integration of Data and AI. These vendors have taken "integration" as their core design philosophy from the beginning, rather than piecing together "big data + AI plugins," therefore they can cover full-chain requirements of architecture, process, and scenarios.

Figure: Snowflake's "Snowflake Pattern" Multidimensional Data Model

First, these companies have sufficient technical capabilities. For example, Databricks' Delta Lake provides ACID transactions and version control for data; Snowflake's architecture, which separates storage from compute, supports on-demand scaling and reduces enterprise costs by 30%-50%; and KeenData's KeenData Lakehouse, with a 97% self-developed code rate, achieves full-stack domestic innovation adaptation, meeting the security and compliance requirements of state-owned enterprises and government.

Second, these companies are "capital darlings" with extremely fast revenue scale growth, rich industry experience, and can provide customized solutions based on business logic from different fields.

Third, unlike cloud computing vendors, Data & AI infrastructure platform providers offer modular product matrices that can be selected on demand, which avoids unnecessary functional redundancy and keeps costs controllable.
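The "ACID transactions and version control" attributed to Delta Lake in the first point can be illustrated with a toy append-only commit log. This is a conceptual sketch only: `ToyVersionedTable` is a hypothetical class, it is not Delta Lake's API or implementation, and it covers just two ideas, namely all-or-nothing commits and reading a table as of an earlier version.

```python
from typing import Dict, List, Optional


class ToyVersionedTable:
    """Append-only log of committed batches; each commit is a new version."""

    def __init__(self) -> None:
        self._log: List[List[Dict]] = []

    @property
    def version(self) -> int:
        """Latest committed version, or -1 if nothing has been committed."""
        return len(self._log) - 1

    def commit(self, rows: List[Dict]) -> int:
        """Validate the whole batch first so a bad row rejects the commit."""
        batch = [dict(row) for row in rows]
        for row in batch:
            if "id" not in row:
                raise ValueError("every row needs an 'id'")
        self._log.append(batch)  # the batch becomes visible in one step
        return self.version

    def read(self, version: Optional[int] = None) -> List[Dict]:
        """Return all rows as of the given version (default: latest)."""
        upto = self.version if version is None else version
        if upto < -1 or upto > self.version:
            raise ValueError("unknown version")
        rows: List[Dict] = []
        for batch in self._log[: upto + 1]:
            rows.extend(batch)
        return rows


table = ToyVersionedTable()
table.commit([{"id": 1, "sku": "A"}])                         # version 0
table.commit([{"id": 2, "sku": "B"}, {"id": 3, "sku": "C"}])  # version 1

try:
    table.commit([{"id": 4, "sku": "D"}, {"sku": "no-id"}])   # rejected as a whole
except ValueError:
    pass

print(len(table.read(0)), len(table.read()), table.version)  # 1 3 1
```

For model training, the practical value is reproducibility: a training run can record the table version it read, and the same data can be read again later even after new commits have landed.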

Therefore, Data & AI infrastructure platform providers are suitable for large enterprises with high requirements for "Data & AI integration depth," especially state-owned enterprises, manufacturing, financial institutions, etc. These enterprises not only need data processing and AI development capabilities but also need to meet requirements such as private deployment, domestic innovation compliance, and industry customization.

However, there are also differences among these companies. In North America, SaaS is a good business, which makes Databricks and Snowflake somewhat more focused on SaaS tool ecosystems than on platform integration and service capabilities. Many Chinese enterprises, tempered by the digital transformation wave of the past decade, designed their products for giant enterprises from the beginning and have stronger platform and system capabilities.

OpenAI founder Sam Altman recently stated in an interview that the United States has underestimated the threat of China's next-generation artificial intelligence, and relying solely on chip restrictions is not an effective solution. Altman also stated that competition from Chinese models, especially models like DeepSeek and Kimi K2, is an important reason why OpenAI recently decided to release open-source models.

Wang Wenjing, Chairman and CEO of Yonyou, mentioned in an interview with Xinhua News that China's new generation of enterprise software has become competitive in the global market, and in the digital intelligence era, Chinese enterprise software and intelligent service platforms will lead globally, just like China's mobile internet platforms and new energy vehicles.

Yu Yang, Founder & General Manager of KeenData, also stated: "In the management software era, European and American companies dominated China's large customer market, and Chinese companies had very low profits. After years of digital transformation development, we have new understanding and achievements. For example, in Data & AI infrastructure software, drawing on experience accumulated in projects for large domestic organizations, enterprises, and government, KeenData's data intelligence platform is superior to the Data SaaS tool combinations offered by the American companies Databricks and Snowflake in terms of enterprise-level and overall capabilities.

"In detail, the digital intelligence infrastructure we build consists of a complete set of software that can be split or combined, designed from the beginning around the concept of centralized management and distributed empowerment. It can provide private deployment and complete construction methodologies, as well as personnel management suggestions for the departments that need to be established, ensuring effective deployment and long-term operation of digital intelligence infrastructure. American companies provide scattered SaaS-level tools with insufficient enterprise-level and holistic thinking and no deployment suggestions or guidance, requiring customers to combine various Data SaaS/AI SaaS tools themselves.

"Digital intelligence infrastructure software, as a representative of China's new quality productive forces, will shine globally, and this is the direction KeenData has always been committed to and working toward."

Regarding cooperation costs, enterprises often fall into the misconception of "only looking at initial procurement costs" during selection. In fact, Data & AI platform costs need to cover the full cycle of "deployment-operation-iteration," with significant differences in cost structures among different types of vendors.

Big data vendors and cloud vendors have low initial deployment costs but high long-term costs. This is especially true for large enterprises that require private deployment, where hidden costs can be very high because a cloud vendor's private deployment requires customized modification of the underlying architecture. PingCode once stated that, according to common domestic industry practice, private deployment subscription prices are usually 2-3 times those of public cloud. Big data vendors, for their part, need to add new modules that may conflict with existing AI tools, potentially increasing effort and costs.

Data & AI infrastructure platform providers have slightly higher initial deployment costs but are more controllable in the long term. From an iteration cost perspective, modular design is more suitable for business growth and overseas business expansion. This is also closely related to the current overall trend of Chinese enterprises going global.
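The full-cycle view of "deployment-operation-iteration" cost can be made concrete with a small calculation. The sketch below is illustrative only: the cost figures are invented, unitless numbers chosen to show the shape of the comparison, not data from any vendor, and the flat annual cost model is an assumption.

```python
from typing import Dict, Optional


def total_cost(initial: float, annual_operation: float,
               annual_iteration: float, years: int) -> float:
    """Cumulative cost over the full cycle, assuming flat annual costs."""
    return initial + years * (annual_operation + annual_iteration)


def break_even_year(low_entry: Dict[str, float],
                    high_entry: Dict[str, float],
                    horizon: int = 10) -> Optional[int]:
    """First year in which the higher-entry option is no more expensive."""
    for year in range(1, horizon + 1):
        if total_cost(**high_entry, years=year) <= total_cost(**low_entry, years=year):
            return year
    return None


# Hypothetical figures in arbitrary units.
low_entry_option = {"initial": 100, "annual_operation": 80, "annual_iteration": 40}
integrated_option = {"initial": 250, "annual_operation": 50, "annual_iteration": 20}

print(break_even_year(low_entry_option, integrated_option))  # 3
print(total_cost(**low_entry_option, years=5))               # 700
print(total_cost(**integrated_option, years=5))              # 600
```

With these made-up numbers, the option that looks cheaper at procurement time becomes the more expensive one from the third year on, which is the misconception of "only looking at initial procurement costs" in numerical form.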

3 Thinking Beyond Cost: Data & AI Needs to Adapt to Global Markets

Unlike in the past, in 2025 enterprises that are confident in their products would do well to make going global part of their core strategic planning. All AI Infra construction must take this cost into account.

With Databricks preparing for an IPO and Snowflake's market value exceeding $70 billion, competition in the global Data & AI track has moved from the technology exploration stage to the ecosystem positioning stage. Chinese challengers such as Alibaba Cloud and KeenData have already completed the initial task of "domestic substitution" and have begun to confront leading overseas enterprises directly in global markets.

For example, since 2016, Saudi Arabia has been fully advancing "Vision 2030," continuously increasing investment in the digital economy field, committed to achieving economic diversification transformation. Saudi Arabia's 2024 annual report shows that as of the end of 2024, 85% of initiatives have been completed or are progressing as planned.

Figure: Saudi Arabia's "Vision 2030" Concept Diagram

Chinese enterprises are heavily represented in this large-scale digital engineering project, which will last for more than a decade.

On May 22, 2022, Saudi Telecom, the largest mobile operator in the Middle East, announced that it had established Saudi Cloud Computing Company (SCCC) in Riyadh, Saudi Arabia's capital, together with Alibaba Cloud, YiDa Capital, and Saudi AI Company and Saudi Information Technology Company under Saudi Arabia's Public Investment Fund PIF.

Domestic AI Infra vertical professional vendors represented by KeenData have also successfully reached cooperation agreements with multiple Saudi enterprises and institutions, leveraging their innovative advantages in key technology fields such as Data Fabric, Data Mesh, Active Metadata Management, and DataOps, providing customized Data&AI infrastructure solutions to help local enterprises improve data management and analysis capabilities.

Globalization is becoming a new strategic proposition for all enterprises.

Therefore, for enterprises planning future overseas business, working with Data & AI infrastructure platform providers to build AI Infra, and thereby keeping costs under better control, is a sound approach to strategic technology selection.

As complete industrial ecosystems gradually spread from domestic to international markets, they bring better cost-effectiveness, better-suited services, and better-adapted products, giving other Chinese enterprises more confidence in going global. Planning for a Data & AI platform should therefore take future overseas business expansion into account from the start.